Lab 11: Testing

Overview

In this lab, we will write unit test cases and design a test plan for user testing of your project. You will work as a group, and only 1 submission per group is needed. However, if you are absent for this lab without permission, you will receive a 0. Make sure to submit a link to your project repository on Canvas.

To receive credit for this lab, you MUST be present during the recitation. Submissions are due right before your respective lab session in the following week. For example, if your lab is this Friday at 10 AM, the submission deadline is next Friday at 10 AM. There is a "NO LATE SUBMISSIONS" policy for labs. You will work on this entire lab as a group.

Learning Objectives

LO1. Appreciate the importance of having a robust test suite for your application

LO2. Learn to write a comprehensive test plan

LO3. Write unit tests using Chai and Mocha

LO4. Handle a variety of positive and negative test cases ranging from simple rendering to accessing authenticated routes

Part A - Pre-Lab Quiz

Complete Pre-Lab quiz on Canvas before your section's lab time.

Part B

Test Plan

A document describing the scope, approach, resources, and schedule of intended test activities.

Importance of Test Plan

- To help people outside the test team including developers, business managers, and customers understand the details of testing.

- It guides our thinking. It is like a rule book that we can follow.

- It documents important aspects of testing like test estimation, test scope, and test strategy so it can be reviewed by the management team and re-used for other projects.

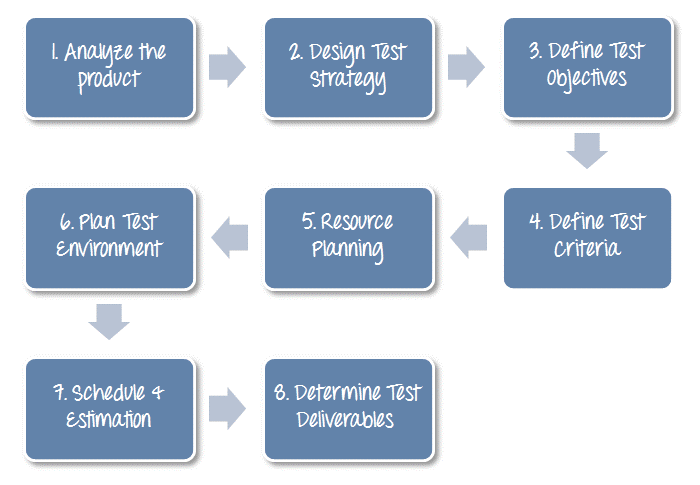

Steps to create a Test Plan

The diagram below provides an overview of how to create a Test Plan.

Types of Testing

1. Unit Testing

Unit Testing is a type of software testing where individual units or components of the software are tested. The purpose is to validate that each unit of the software code performs as expected. Unit Testing is done by the developers during the development (coding phase) of an application. Unit Tests isolate a section of code and verify its correctness. A unit may be an individual function, method, procedure, module, or object.

Today we will be writing unit test cases to test the /register API in your projects. Unit Tests in Node.js can be written using two libraries, Mocha and Chai:

- Mocha: A framework that allows you to methodically call the APIs and functions to be tested.

- Chai: An assertion library to validate the response received from the API.

TODO: Write 2 Unit Test Cases

You will work on your project repository. If you haven't already set up the framework for your project, follow the instructions as provided below to do that before starting the lab.

1. Navigate to your project folder

Once you've created and cloned your project repository, navigate to the ProjectSourceCode/ folder. The files and directories which we will be creating below (package.json, docker-compose.yaml, .env, test/) should all be inside the ProjectSourceCode/ folder.

2. Initialize project and update package.json

If you do not already have the package.json file, run the following command to initialize a node project.

npm init

Now open the package.json file and copy the following content into the file.

TODO: Make sure to change the name of the project to your project name.

    {
      "name": "<TODO: project-name>",
      "main": "src/index.js",
      "dependencies": {
        "express": "^4.6.1",
        "pg-promise": "^10.11.1",
        "body-parser": "1.20.0",
        "express-session": "1.17.3",
        "express-handlebars": "^7.1.2",
        "handlebars": "^4.7.8",
        "axios": "^1.1.3",
        "bcryptjs": "^2.4.0"
      },
      "devDependencies": {
        "nodemon": "^2.0.7",
        "mocha": "^6.2.2",
        "chai": "^4.2.0",
        "chai-http": "^4.3.0",
        "npm-run-all": "^4.1.5"
      },
      "scripts": {
        "prestart": "npm install",
        "start": "nodemon index.js",
        "test": "mocha",
        "testandrun": "npm run prestart && npm run test && npm start"
      }
    }

As you may have observed, we have added some additional dependencies related to mocha and chai so you can use them to write automated tests.

3. Set up docker-compose.yaml

In this lab, like in the previous labs, we will be using 2 containers, one for PostgreSQL and one for Node.js. If you need a refresher on the details of Docker Compose, please refer to Lab 1. We have updated the configuration of the db container for this lab. Copy the configuration into your docker-compose.yaml file.

    version: '3.9'
    services:
      db:
        image: postgres:14
        env_file: .env
        expose:
          - '5432'
        volumes:
          - group-project:/var/lib/postgresql/data
          - ./src/init_data:/docker-entrypoint-initdb.d
      web:
        image: node:lts
        user: 'node'
        working_dir: /repository
        env_file: .env
        environment:
          - NODE_ENV=development
        depends_on:
          - db
        ports:
          - '3000:3000'
        volumes:
          - ./:/repository
        command: 'npm start' #TODO: change the command to `npm run testandrun` to run mocha
    volumes:
      group-project:

4. Set up .env file

Create a .env file and copy over configuration shown in the code block below.

TODO: Make sure to replace the value for the API_KEY

    # database credentials
    POSTGRES_USER="postgres"
    POSTGRES_PASSWORD="pwd"
    POSTGRES_DB="users_db"

    # Node vars
    SESSION_SECRET="super duper secret!"
    API_KEY="<TODO:Replace with your Ticketmaster API key if you are using lab 8 as a base>"
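
Because both services list `.env` under `env_file`, these values are available inside the containers as environment variables. The sketch below shows one way the Node.js code could turn them into database connection settings. The helper name `buildDbConfig` is hypothetical, and the host name `db` is an assumption based on the service name in docker-compose.yaml; adapt it to your project's own connection code.

```javascript
// Hypothetical helper (not part of the lab code): builds database connection
// settings from environment variables such as those defined in .env.
function buildDbConfig(env) {
  return {
    host: 'db', // assumption: the service name of the PostgreSQL container
    port: 5432, // the port exposed by the db service
    database: env.POSTGRES_DB,
    user: env.POSTGRES_USER,
    password: env.POSTGRES_PASSWORD,
  };
}

// Inside the web container you would pass process.env. Sample values are used
// here so that the sketch runs on its own.
const dbConfig = buildDbConfig({
  POSTGRES_USER: 'postgres',
  POSTGRES_PASSWORD: 'pwd',
  POSTGRES_DB: 'users_db',
});

console.log(dbConfig.database, dbConfig.user);
```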

5. Create and initialize server.spec.js

Create a directory test within the ProjectSourceCode/ folder. Inside the created test/ directory, create a file server.spec.js.

Paste the following snippet in the file just created.

    // ********************** Initialize server **********************************

    const server = require('../index'); //TODO: Make sure the path to your index.js is correctly added

    // ********************** Import Libraries ***********************************

    const chai = require('chai'); // Chai provides the assert, expect and should assertion styles.
    const chaiHttp = require('chai-http'); // Chai HTTP provides an interface for live integration testing of the APIs.
    chai.should();
    chai.use(chaiHttp);
    const {assert, expect} = chai;

    // ********************** DEFAULT WELCOME TESTCASE ****************************

    describe('Server!', () => {
      // Sample test case given to test the /welcome endpoint.
      it('Returns the default welcome message', done => {
        chai
          .request(server)
          .get('/welcome')
          .end((err, res) => {
            expect(res).to.have.status(200);
            expect(res.body.status).to.equals('success');
            assert.strictEqual(res.body.message, 'Welcome!');
            done();
          });
      });
    });

    // *********************** TODO: WRITE 2 UNIT TESTCASES **************************

    // ********************************************************************************

Know before you proceed!

Here are some useful definitions of what you just copied.

| Term | Description |
|---|---|
| describe | Begins a test suite of one or more tests. `describe` is a function that groups together all our test cases/expectations. The first argument is a string that describes the feature, and the second argument is a function containing the tests for that feature. Mainly used to organize the test code. |
| it | Defines a single test case. The `it` function is very similar to the `describe` function, except that we cannot add any more `it` or `describe` functions under it. This is usually the place where we include all `assert`, `expect` and `should` statements. |
| assert | `assert` functions compare the values passed to them. In the example above, `assert.strictEqual` compares `res.body.message` with `'Welcome!'`. If they are equal, the test continues; otherwise an assertion error is thrown and the test fails. See the Chai documentation for more details about using assert. |
| expect | `expect` is oriented towards testing the behavior of the system through readable, chained checks. In the example above, it checks that the response has status 200 and that `res.body.status` equals `'success'`. |
| done(); | For testing asynchronous methods: when you add an argument (usually named `done`) to the callback passed to `it()`, Mocha knows that it should wait for this function to be called before completing the test. |
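
To make the pass/fail behavior of assertions concrete, here is a minimal sketch. It uses Node's built-in `assert` module as a stand-in for Chai so that it runs without installing anything; Chai's assert style follows the same idea. The `passes` helper and the sample `body` object are hypothetical and only exist for this illustration.

```javascript
// Sketch only: Node's built-in assert module stands in for Chai here.
const {strictEqual, AssertionError} = require('node:assert');

// Hypothetical object shaped like the body returned by the /welcome route.
const body = {status: 'success', message: 'Welcome!'};

// Runs a check and reports whether it passed. A failing assertion does not
// return false by itself; it throws an AssertionError, which is caught here.
function passes(check) {
  try {
    check();
    return true;
  } catch (err) {
    if (err instanceof AssertionError) return false;
    throw err; // anything else is a real bug, so rethrow it
  }
}

const matching = passes(() => strictEqual(body.message, 'Welcome!'));
const mismatched = passes(() => strictEqual(body.message, 'Goodbye!'));

console.log(matching, mismatched);
```

Mocha does the equivalent of `passes` for you: a test whose callback throws an assertion error is reported as failing.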

6. Adding sample route to index.js

Add the following API to the index.js of your project. This is a dummy API to test using the code snippet given in Step 5.

    app.get('/welcome', (req, res) => {
      res.json({status: 'success', message: 'Welcome!'});
    });

7. Using module.exports

In your index.js, replace

app.listen(3000);

with

module.exports = app.listen(3000);

Here we are exporting the server started in index.js so that our test file can access it.

In Node.js programming, modules are self-contained units of functionality that can be shared and reused across projects. They make our lives as developers easier, as we can use them to augment our applications with functionality that we haven’t had to write ourselves. They also allow us to organize and decouple our code, leading to applications that are easier to understand, debug and maintain.

Hence we are exporting the running server, which serves the welcome API, as a module.

8. Starting Docker Compose

Now that we have all the files required to test the welcome API, starting docker compose is quite simple.

docker compose up

9. Shutting down containers

Now that we've learned how to start Docker Compose, let's cover how to shut down the running containers. As long as you're still in the same directory, use the command shown below. The -v flag removes the volume(s) created for the containers along with the containers. In this lab, you need the -v flag only if you want to reinitialize your database with an updated create.sql.

docker compose down -v

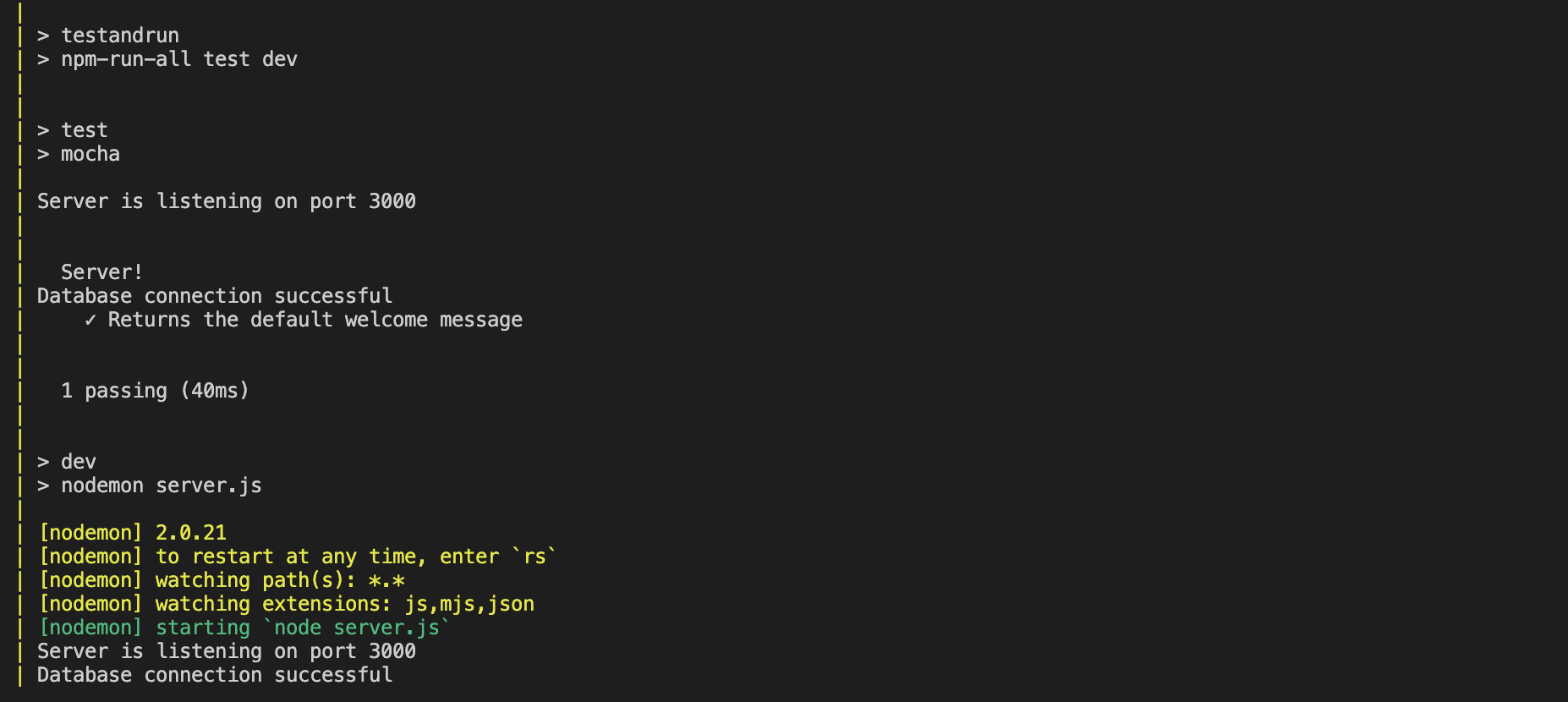

10. Check your terminal

After you start Docker Compose, you will see the result of the welcome test case in your terminal, provided the command in docker-compose.yaml has been changed to `npm run testandrun`.

PASS:

11. Restarting Containers

For development purposes, you will often need to restart the containers, especially if you change your initialization files. The test cases are executed before the server starts. Whenever you want to run the test cases, or after you change any of them, restart your containers so that the updates are reflected in the web container:

docker compose down --volumes

docker compose up

In your project, please be careful before running docker compose down --volumes. It removes the database volume, so the database is created from scratch on the next start, and any inserts made after running the app are lost. Inserts that are part of init_data are added again when the db container restarts.

How to check your Docker logs?

Check this guide out to debug your Docker setup.

12. Write 2 test cases for the /register API in your website.

For writing the testcases for the /register API, we would need the following:

1. Register API in index.js: Copy the register API from Lab 8 or write a new register API in the index.js for your project.

2. users table in create.sql: We will need the users table to complete this task. Copy the query that creates the users table from Lab 8 and add it to create.sql in the init_data folder that is mounted into the db container (./src/init_data in the docker-compose.yaml above).

Now let's start writing the testcases. We will be writing 1 Positive Testcase and 1 Negative Testcase.

i. Positive Test Case: The test case should pass and the expected task must be completed.

Write a positive test case to test your API's correctness. You will pass a valid email id/username and password to the API as input and verify that the user is properly inserted into the users table. Use the following POST API test case example and edit it based on your API.

Remember that you need to make some modifications to this test case OR the route that adds new users. It won't work if you directly copy and paste the code given below. What is your registration route actually called? Does it return a 200 status when user registration is successful?

In test driven development (TDD), we write our tests first and then write the code to satisfy the test. Alternatively, in the spirit of TDD, you can modify your register route to align with the test shown here.

Example Code:

    // Example Positive Testcase :
    // API: /add_user
    // Input: {id: 5, name: 'John Doe', dob: '2020-02-20'}
    // Expect: res.status == 200 and res.body.message == 'Success'
    // Result: This test case should pass and return a status 200 along with a "Success" message.
    // Explanation: The testcase will call the /add_user API with the following input
    // and expects the API to return a status of 200 along with the "Success" message.

    describe('Testing Add User API', () => {
      it('positive : /add_user', done => {
        chai
          .request(server)
          .post('/add_user')
          .send({id: 5, name: 'John Doe', dob: '2020-02-20'})
          .end((err, res) => {
            expect(res).to.have.status(200);
            expect(res.body.message).to.equals('Success');
            done();
          });
      });
    });

The /register API may be different in your implementation. Make sure to make the necessary edits to the example above before attempting to run it.

After making the required changes to the above test case based on your Register API, re-run Docker and you should see the result.

ii. Negative Test Case: The test case should pass and the expected task must not be completed. You are testing whether your API recognizes incorrect POSTs and responds with the appropriate error message.

- Write a negative test case to test your API's correctness. You will pass an invalid email id/username and password to the API as input and verify that the API returns a 400 HTTP status code. Use the following POST API test case example and edit it based on your API.

Example Code:

    // We are checking the POST /add_user API by passing the user info in an incorrect manner (name cannot be an integer). This test case should pass and return a status 400 along with an "Invalid input" message.

    describe('Testing Add User API', () => {
      // The positive test case from the example above goes here.

      // Example Negative Testcase :
      // API: /add_user
      // Input: {id: 5, name: 10, dob: '2020-02-20'}
      // Expect: res.status == 400 and res.body.message == 'Invalid input'
      // Result: This test case should pass and return a status 400 along with an "Invalid input" message.
      // Explanation: The testcase will call the /add_user API with the following invalid inputs
      // and expects the API to return a status of 400 along with the "Invalid input" message.
      it('Negative : /add_user. Checking invalid name', done => {
        chai
          .request(server)
          .post('/add_user')
          .send({id: 5, name: 10, dob: '2020-02-20'})
          .end((err, res) => {
            expect(res).to.have.status(400);
            expect(res.body.message).to.equals('Invalid input');
            done();
          });
      });
    });

When to Use HTTP Status Code 400

- Malformed Syntax: Use 400 when the client's request message fails to follow the syntax expected by the server, such as missing or incorrect parameters in the request.

- Invalid Parameters: Use 400 when the request contains invalid parameters, such as out-of-range values or unsupported formats, preventing the server from processing the request.

- Client-Side Errors: Use 400 when the error is due to client-side issues, indicating that the client needs to correct the request before resubmitting.

In summary, use the 400 status code to indicate that the client's request cannot be fulfilled due to syntax errors or invalid parameters, and the client needs to modify the request to proceed.
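
As an illustration of the "invalid parameters" case, here is a minimal sketch of the kind of input check a route could run before touching the database. The function `validateUser` and its rules (a non-empty string name and a `YYYY-MM-DD` date) are assumptions made for the /add_user example; they are not part of the lab code.

```javascript
// Hypothetical validator for the /add_user example input (not part of the lab code).
function validateUser(input) {
  const nameOk = typeof input.name === 'string' && input.name.trim() !== '';
  // Accept only dates written as YYYY-MM-DD.
  const dobOk = typeof input.dob === 'string' && /^\d{4}-\d{2}-\d{2}$/.test(input.dob);

  if (!nameOk || !dobOk) {
    return {status: 400, message: 'Invalid input'};
  }
  return {status: 200, message: 'Success'};
}

const valid = validateUser({id: 5, name: 'John Doe', dob: '2020-02-20'});
const invalid = validateUser({id: 5, name: 10, dob: '2020-02-20'});

console.log(valid.status, invalid.status);
```

Inside an Express route, the result could be sent with `res.status(result.status).json({message: result.message})`, which is what the positive and negative test cases above check for.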

To learn more about use-cases for other HTTP status codes, please visit this page: MDN Web Documentation - HTTP Status

TO-DO: Write Unit Tests

- Write a positive test case to test your API's correctness. You will pass a valid email id/username and password to the API as input and verify that the user is properly inserted into the users table. Use the POST API test case example above and edit it based on your API.

- Write a negative test case to test your API's correctness. You will pass an invalid email id/username and password to the API as input and verify that the API returns a 400 HTTP status code. Use the POST API test case example above and edit it based on your API.

13. Examples for testing Redirect and Render

i. Testing redirect to another API:

Example Code:

    describe('Testing Redirect', () => {
      // Sample test case given to test /test endpoint.
      it('/test route should redirect to /login with 302 HTTP status code', done => {
        chai
          .request(server)
          .get('/test')
          .end((err, res) => {
            res.should.have.status(302); // Expecting a redirect status code
            res.should.redirectTo(/^.*127\.0\.0\.1.*\/login$/); // Expecting a redirect to /login with the mentioned Regex
            done();
          });
      });
    });

Here's a brief explanation of the code for testing redirect():

- `describe('Testing Redirect', () => { ... });`: This defines a test suite named 'Testing Redirect', which contains one or more test cases related to redirect functionality.

- `it('/test route should redirect to /login with 302 HTTP status code', done => { ... });`: This defines a single test case within the test suite. The test case description indicates that it's testing the '/test' route for a redirect to '/login' with a 302 HTTP status code.

- `chai.request(server).get('/test').end((err, res) => { ... });`: This makes a GET request to the '/test' route of the Express.js application using the Chai HTTP plugin.

- `res.should.have.status(302);`: This assertion checks that the response has a status code of 302, indicating a redirect.

- `res.should.redirectTo(/^.*127\.0\.0\.1.*\/login$/);`: This assertion verifies that the response redirects to a URL that conforms to the provided Regex pattern. The pattern requires the URL to contain '127.0.0.1' (your localhost) and to end with '/login'. If a string is passed instead of a Regex, the assertion checks that the absolute redirect URL matches that string.

- `done();`: This is a callback function provided by Mocha to indicate that the asynchronous test is complete.

Overall, this test case ensures that when a request is made to the '/test' route, the application responds with a redirect to the '/login' route with a 302 HTTP status code.
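
To see which redirect targets the Regex from this example accepts, the pattern can be tried against a few URLs directly. The URLs below are hypothetical; in particular, the port number is an assumption and depends on how the test server is started.

```javascript
// Pattern used by res.should.redirectTo(...) in the example above.
const loginRedirect = /^.*127\.0\.0\.1.*\/login$/;

// Hypothetical redirect targets; the port number is an assumption.
const candidates = [
  'http://127.0.0.1:3000/login', // contains 127.0.0.1 and ends with /login
  'http://127.0.0.1:3000/login?next=profile', // does not end with /login
  'http://localhost:3000/login', // does not contain 127.0.0.1
];

const results = candidates.map(url => loginRedirect.test(url));

console.log(results);
```

Only the first URL matches. If your route redirects with a query string or your tests reach the server under a different host name, the pattern needs to be adjusted accordingly.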

ii. Testing Render:

Example Code:

    describe('Testing Render', () => {
      // Sample test case given to test /login endpoint.
      it('test "/login" route should render with an html response', done => {
        chai
          .request(server)
          .get('/login') // for reference, see lab 8's login route (/login) which renders home.hbs
          .end((err, res) => {
            res.should.have.status(200); // Expecting a success status code
            res.should.be.html; // Expecting a HTML response
            done();
          });
      });
    });

Here's a brief explanation of the code for testing render():

- `describe('Testing Render', () => { ... });`: This defines a test suite named 'Testing Render', which contains one or more test cases related to rendering functionality.

- `it('test "/login" route should render with an html response', done => { ... });`: This defines a single test case within the test suite. The test case description indicates that it's testing the '/login' route for rendering with an HTML response.

- `chai.request(server).get('/login').end((err, res) => { ... });`: This makes a GET request to the '/login' route of the Express.js application using the Chai HTTP plugin.

- `res.should.have.status(200);`: This assertion checks that the response has a status code of 200, indicating success.

- `res.should.be.html;`: This assertion checks that the response is in HTML format.

- `done();`: This is a callback function provided by Mocha to indicate that the asynchronous test is complete.

Overall, this test case ensures that when a request is made to the '/login' route, the application responds with an HTML page and a status code of 200.

2. Integration Testing

A type of testing where software modules are integrated logically and tested as a group. A typical software project consists of multiple software modules, coded by different programmers. The purpose of integration testing is to expose defects in the interaction between these software modules when they are integrated.

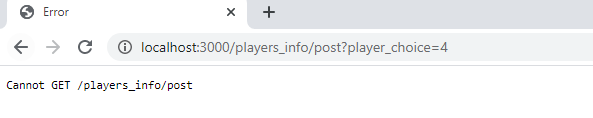

Below is an example where the integration of two pieces of code, written by programmers A and B, failed:

- Programmer A developed the front-end (i.e., the .hbs files in the views folder) and provided a reference for the dropdown action '/players_info/post'.

- Programmer B developed the back-end (i.e., the server.js file) to handle retrieving data from the database and returning the correct data for the '/player_info/post' request.

When both pieces of code are integrated and the application is used, an error occurs:

Can you identify what caused this error?

Look at the routes used by Programmer A and Programmer B; there is a typo. The route used by Programmer A is '/players_info/post' while the route used by Programmer B is '/player_info/post' . This is one of the common mistakes that happens when programmers work on different parts of the code. This is why we need to perform integration testing.
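
A mismatch like this can also be caught mechanically. The sketch below compares the routes referenced by the front-end with the routes handled by the back-end. The function `findUnhandledRoutes` and the two hard-coded lists are hypothetical; in a real project you would have to collect these lists from your views and your server code.

```javascript
// Returns the routes the front-end refers to that the back-end does not handle.
function findUnhandledRoutes(frontEndRoutes, backEndRoutes) {
  const handled = new Set(backEndRoutes);
  return frontEndRoutes.filter(route => !handled.has(route));
}

const usedByViews = ['/players_info/post']; // referenced by Programmer A
const handledByServer = ['/player_info/post']; // implemented by Programmer B

const unhandled = findUnhandledRoutes(usedByViews, handledByServer);

console.log(unhandled);
```

A non-empty result means that at least one request from the front-end has no matching route on the server.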

3. User Acceptance Testing

This is the last phase of the software testing process. During UAT, actual users test the software to make sure it can handle the required tasks in real-world scenarios, according to the specifications.

Below are some sample test cases for UAT testing:

- User should be able to login with correct credentials.

- User authentication fails when the user provides invalid credentials.

- The form provides the user with specific feedback about the error.

Acceptance Criteria:

Acceptance criteria refers to a set of predefined requirements that must be met in order to mark a user story as complete. Below is an example:

- A user cannot submit a form without completing all of the mandatory fields. Mandatory fields include:
  - Name
  - Email Address
  - Password
  - Confirm Password

- Information from the form is stored in the user table in the football_db database.
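
The first criterion above can be expressed as a small check, which is useful when turning acceptance criteria into concrete test cases. This is a sketch only: the field keys (`name`, `email`, `password`, `confirmPassword`) and the function `missingFields` are hypothetical and should be replaced with the names used in your own form.

```javascript
// Field keys are hypothetical; use the names from your own form.
const MANDATORY_FIELDS = ['name', 'email', 'password', 'confirmPassword'];

// Returns the mandatory fields that are absent or blank in the submitted form.
function missingFields(form) {
  return MANDATORY_FIELDS.filter(field => {
    const value = form[field];
    return typeof value !== 'string' || value.trim() === '';
  });
}

const incomplete = missingFields({name: 'John Doe', email: '', password: 'pwd'});
const complete = missingFields({
  name: 'John Doe',
  email: 'john@example.com',
  password: 'pwd',
  confirmPassword: 'pwd',
});

console.log(incomplete, complete);
```

The form may be submitted only when the returned list is empty.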

TODO: Create UAT plans for at least 3 features

You have to execute this test plan in week 4 of your project. So it would be ideal if your test plan is well thought through as it will expedite the testing process.

- Create 1 document per team, within the milestones folder in the project directory, that describes how at least 3 features within your finished product will be tested.

- The test plans should include specific test cases (user acceptance test cases) that describe the data and the user activity that will be executed in order to verify proper functionality of the feature.

- The test plans should include a description of the test data that will be used to test the feature.

- The test plans should include a description of the test environment ( localhost / cloud ) that will be used to test the feature.

- The test plans should include a description of the expected test results that will be used to decide whether the feature works.

- The test plan should include information about the user acceptance testers.

- The actual test results based on observations after executing the tests. Your team will include these results in the final project report.

Risks

A future, uncertain event with a probability of occurrence and a potential for loss.

Types of Project Risks

- Organizational Risks: Occurs due to lack of resources to complete the project on time, lack of skilled members in team, etc.

- Technical Risks: Occurs due to untested code, improper implementation of test cases, limited test data, etc.

- Business Risks: External risk (i.e., from company / customer not from your project) like budget issues, etc.

Part C

TODO: Write 2 additional Unit Testcases

Write two unit test cases (1 positive and 1 negative) for any of the API endpoints, apart from register, in your project.

If your APIs require a session to be accessed, check out the example below to get cookies from the login API and set them in all other requests.

Consider a simple profile route post authentication as shown here. Remember, a user will have to login to be able to access this route.

    // Authentication Required
    app.use(auth);

    app.get('/profile', (req, res) => {
      if (!req.session.user) {
        return res.status(401).send('Not authenticated');
      }
      try {
        res.status(200).json({
          username: req.session.user.username,
        });
      } catch (err) {
        console.error('Profile error:', err);
        res.status(500).send('Internal Server Error');
      }
    });

How would the tests look for this route? We would need to mock the login process and check that we are receiving the profile info from the server.

We can use an intermediate agent to support the testing. Here's a step-by-step break down of the code shown below:

- Create an agent through which authenticated requests will be made

- Set up test user credentials for authentication testing

Before all tests (before hook):

- Clear the users table to ensure a clean state

- Create a test user with hashed password in the database

Before each test (beforeEach hook):

- Create a fresh agent instance for handling session cookies

After each test (afterEach hook):

- Close the agent to clear cookies and session data

After all tests (after hook):

- Clean up by truncating the users table

Test scenarios for GET /profile endpoint:

- Test unauthorized access (should return 401)

- Test authenticated access:
  - Login first to establish a session
  - Make request to profile endpoint
  - Verify successful response with user data

Example Code:

    // Assumes the setup from Step 5 (server, chai, expect) is already in this file.
    const bcryptjs = require('bcryptjs'); // used to hash the test user's password
    // `db` must be your pg-promise connection. How the test file gets access to it
    // depends on your project and is not shown here.

    describe('Profile Route Tests', () => {
      let agent;
      const testUser = {
        username: 'testuser',
        password: 'testpass123',
      };

      before(async () => {
        // Clear users table and create test user
        await db.query('TRUNCATE TABLE users CASCADE');
        const hashedPassword = await bcryptjs.hash(testUser.password, 10);
        await db.query('INSERT INTO users (username, password) VALUES ($1, $2)', [
          testUser.username,
          hashedPassword,
        ]);
      });

      beforeEach(() => {
        // Create new agent for session handling
        agent = chai.request.agent(server);
      });

      afterEach(() => {
        // Clear cookie after each test
        agent.close();
      });

      after(async () => {
        // Clean up database
        await db.query('TRUNCATE TABLE users CASCADE');
      });

      describe('GET /profile', () => {
        it('should return 401 if user is not authenticated', done => {
          chai
            .request(server)
            .get('/profile')
            .end((err, res) => {
              expect(res).to.have.status(401);
              expect(res.text).to.equal('Not authenticated');
              done();
            });
        });

        it('should return user profile when authenticated', async () => {
          // First login to get session
          await agent.post('/login').send(testUser);

          // Then access profile
          const res = await agent.get('/profile');
          expect(res).to.have.status(200);
          expect(res.body).to.be.an('object');
          expect(res.body).to.have.property('username', testUser.username);
        });
      });
    });
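
As a complement to the agent-based tests above, the decision logic of the route can also be exercised without any HTTP request by calling the handler with stub objects. This is a different technique from the one used in the lab, shown only as a sketch: `profileHandler` repeats the logic of the /profile route (without the try/catch), and `createStubResponse` is a hypothetical helper that records what the handler sends.

```javascript
// Same logic as the /profile route above, written as a standalone function.
function profileHandler(req, res) {
  if (!req.session.user) {
    return res.status(401).send('Not authenticated');
  }
  return res.status(200).json({username: req.session.user.username});
}

// Hypothetical stub that records what the handler sends instead of writing
// to a network socket. Each method returns the stub so calls can be chained.
function createStubResponse() {
  const res = {statusCode: null, body: null};
  res.status = code => {
    res.statusCode = code;
    return res;
  };
  res.send = text => {
    res.body = text;
    return res;
  };
  res.json = obj => {
    res.body = obj;
    return res;
  };
  return res;
}

// No user in the session: the handler should answer 401.
const anonymous = createStubResponse();
profileHandler({session: {}}, anonymous);

// A user in the session: the handler should answer 200 with the username.
const loggedIn = createStubResponse();
profileHandler({session: {user: {username: 'testuser'}}}, loggedIn);

console.log(anonymous.statusCode, anonymous.body);
console.log(loggedIn.statusCode, loggedIn.body);
```

This does not replace the agent-based tests, since it skips the session middleware and the login route, but it isolates the route's own logic.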


Submission Guidelines

All work for this lab will be done as a group. Make sure to update your project directory with the newly created files.

Commit and upload your changes

- Run the following commands inside your project git directory (that you created).

git add .

git commit -m "Adding automated tests and test plan for the project"

git push

Go to GitHub and verify that all the files/folders for the lab have been successfully uploaded to the remote project repository.

Upload a link to the project repository on Canvas. One submission per group is sufficient. If there is no link on Canvas, that will be evaluated to a "NO SUBMISSION", leading to a 0 for this lab, for the entire team. So please make sure that at least one person uploads the link on Canvas.

Regrade Requests

Please use this link to raise a regrade request if you think you didn't receive a correct grade. If you received a lower than expected grade because of missing/not updated files, please do not submit a regrade request as they will not be considered for reevaluation.

Rubric

| Item | Description | Points |
|---|---|---|
| Part A: Pre-Lab Quiz | Complete the Pre-Lab Quiz before your lab | 20 |
| Part B: Unit Test Cases | Positive and negative test cases written for the /register endpoint on the server | 20 |
| Part B: UAT Plan | The test plan is well written for at least 3 features and includes all the necessary information | 20 |
| Part C: Additional Unit Test Cases | Positive and negative test cases written for an endpoint other than /register on the server | 20 |
| In class check-in | Show your work to TA or CM. | 20 |
| Total | | 100 |